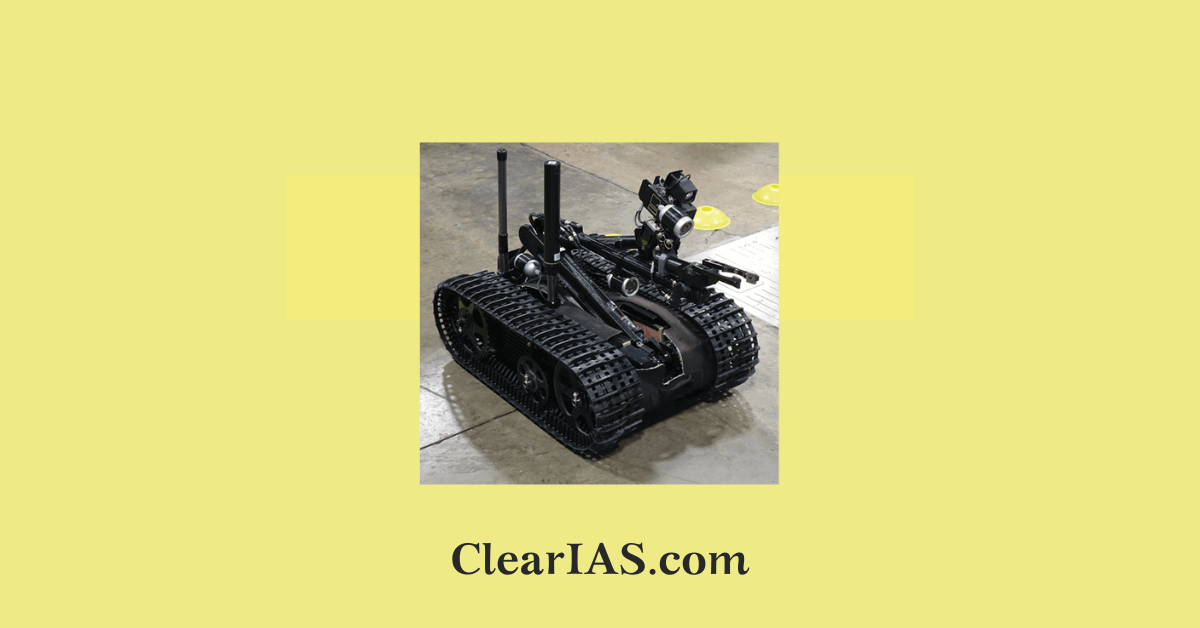

Lethal Autonomous Weapons Systems (LAWS) are a type of military technology that can make decisions about the use of lethal force without direct human intervention. These systems are often colloquially referred to as “killer robots.” Read further to learn more about them.

Recently, the United Nations’ First Committee approved a new resolution on Lethal Autonomous Weapons.

After approving the new draft resolution on lethal autonomous weapons systems, the First Committee (Disarmament and International Security) heard that even if an algorithm can determine what is legal under international humanitarian law, it can never determine what is ethical.

At present, no commonly agreed definition of Lethal Autonomous Weapon Systems (LAWS) exists.

The development and deployment of LAWS raise ethical, legal, and security concerns, leading to ongoing debates at international forums.

Lethal Autonomous Weapon Systems (LAWS)

LAWS are designed to operate without continuous human control. These systems use artificial intelligence (AI) and other advanced technologies to identify and engage targets, make decisions about the use of lethal force, and carry out military operations.

- The most common types of weapons with autonomous functions are defensive systems. These include anti-vehicle and anti-personnel mines, which, once activated, operate autonomously based on trigger mechanisms.

- Newer systems employing increasingly sophisticated technology include missile defense systems and sentry systems, which can autonomously detect and engage targets and issue warnings.

- Other examples include loitering munitions (also known as suicide, kamikaze, or exploding drones), which carry a built-in warhead and loiter around a predefined area until a target is located by an operator on the ground or by automated sensors onboard, and then attack the target.

- Land and sea vehicles with autonomous capabilities are also increasingly being developed. Those systems are primarily designed for reconnaissance and information gathering but may possess offensive capabilities.

These systems first emerged in the 1980s; however, their functionalities have since become increasingly sophisticated, allowing for, among other things, longer ranges, heavier payloads, and the potential incorporation of artificial intelligence (AI) technologies.

Potential Advantages

Proponents argue that LAWS could offer advantages such as faster decision-making, reduced human casualties, and improved precision in targeting. Advocates also suggest that autonomous systems could be programmed to adhere strictly to international humanitarian law.

Role of AI in LAWS

Autonomous weapons systems require “autonomy” to perform their functions in the absence of direction or input from a human actor.

- Artificial intelligence is not a prerequisite for the functioning of autonomous weapons systems, but, when incorporated, AI could further enable such systems.

- In other words, not all autonomous weapons systems incorporate AI to execute particular tasks.

- Autonomous capabilities can be provided through pre-defined tasks or sequences of actions based on specific parameters, or through using artificial intelligence tools to derive behavior from data, thus allowing the system to make independent decisions or adjust behavior based on changing circumstances.

- Artificial intelligence can also be used in an assistance role in systems that are directly operated by a human.

- For example, a computer vision system operated by a human could employ artificial intelligence to identify and draw attention to notable objects in the field of vision, without having the capacity to respond to those objects autonomously in any way.

Ethical and Legal Concerns

The development and deployment of LAWS have raised significant ethical and legal concerns.

- Critics argue that the lack of direct human control raises questions about accountability, responsibility, and the ability to ensure compliance with international humanitarian law, including the principles of distinction, proportionality, and military necessity.

- The complex nature of AI algorithms and the unpredictability of conflict scenarios pose risks of unintended consequences.

- There is widespread concern that LAWS could make incorrect decisions, target civilians, or escalate conflicts without appropriate human oversight.

- Concerns have been raised about the dehumanization of warfare when lethal decisions are delegated to machines. It is argued that removing the human element from decision-making may result in a lack of empathy, accountability, and ethical judgment.

Several campaigns, such as the Campaign to Stop Killer Robots, have emerged to advocate for a ban on fully autonomous weapons. These campaigns emphasize the need for human control over critical decisions in the use of force and the prevention of the development of weapons that could operate without meaningful human control.

Global Stand on LAWS

The international community has engaged in discussions on LAWS at various forums.

These include discussions held under the Convention on Certain Conventional Weapons (CCW) within the framework of the United Nations. Some countries and non-governmental organizations advocate for a preemptive ban on fully autonomous weapons, while others argue for a more cautious and controlled approach to their development and use.

Since 2018, United Nations Secretary-General António Guterres has maintained that lethal autonomous weapons systems are politically unacceptable and morally repugnant and has called for their prohibition under international law.

National Policies

Some countries have taken steps to address concerns related to LAWS through national policies. For example, some have declared their commitment to maintaining meaningful human control over weapon systems, while others have expressed support for the development of LAWS with appropriate safeguards.

China, Israel, Russia, South Korea, the United Kingdom, and the United States are investing heavily in the development of various autonomous weapons systems, while Australia, Turkey, and other countries are also making investments.

In 2018, Austria, Brazil, and Chile recommended launching negotiations on a legally binding instrument to ensure meaningful human control over the critical functions of weapons systems.

India’s stand on LAWS

At the UN General Assembly in October 2013, India supported a proposal to begin multilateral talks on lethal autonomous weapons systems.

India has taken a pragmatic approach to autonomous weaponry in its international dealings.

- India recognizes the value of AI in national defense planning despite its opposition to the UNGA resolution on autonomous weapons, particularly in light of China's military superiority.

- Despite its capabilities in AI, India admits it is not as advanced as China and the United States when it comes to using this technology for military purposes.

Conclusion

The ongoing debates surrounding Lethal Autonomous Weapons Systems highlight the need for careful consideration of ethical, legal, and security implications.

Balancing the potential benefits of autonomous technologies with the need for accountability and adherence to international humanitarian law remains a complex challenge for the global community.


-Article by Swathi Satish
